Five Ways to Spot a Spamouflage Disinformation Campaign

Spamouflage Survives Part 2/6: An overview of Spamouflage’s Coordinated Inauthentic Behavior

Miburo has spent the last eleven months observing and documenting a multi-platform disinformation campaign run by Spamouflage, a disinformation actor aligned with the Chinese Communist Party (CCP). In the first article in this series, we gave an overview of new aspects of Spamouflage’s operations in 2021, including an expansion of its messaging themes to include denial of human rights abuses in Xinjiang and attacks on the Taiwanese government.

In this article, we lay out the five tell-tale signs of a Spamouflage operation. Using these signs, we were able to determine with a high degree of confidence that Spamouflage is the actor behind the operations we observed.

#1. Shifting Identities and Shady Managers

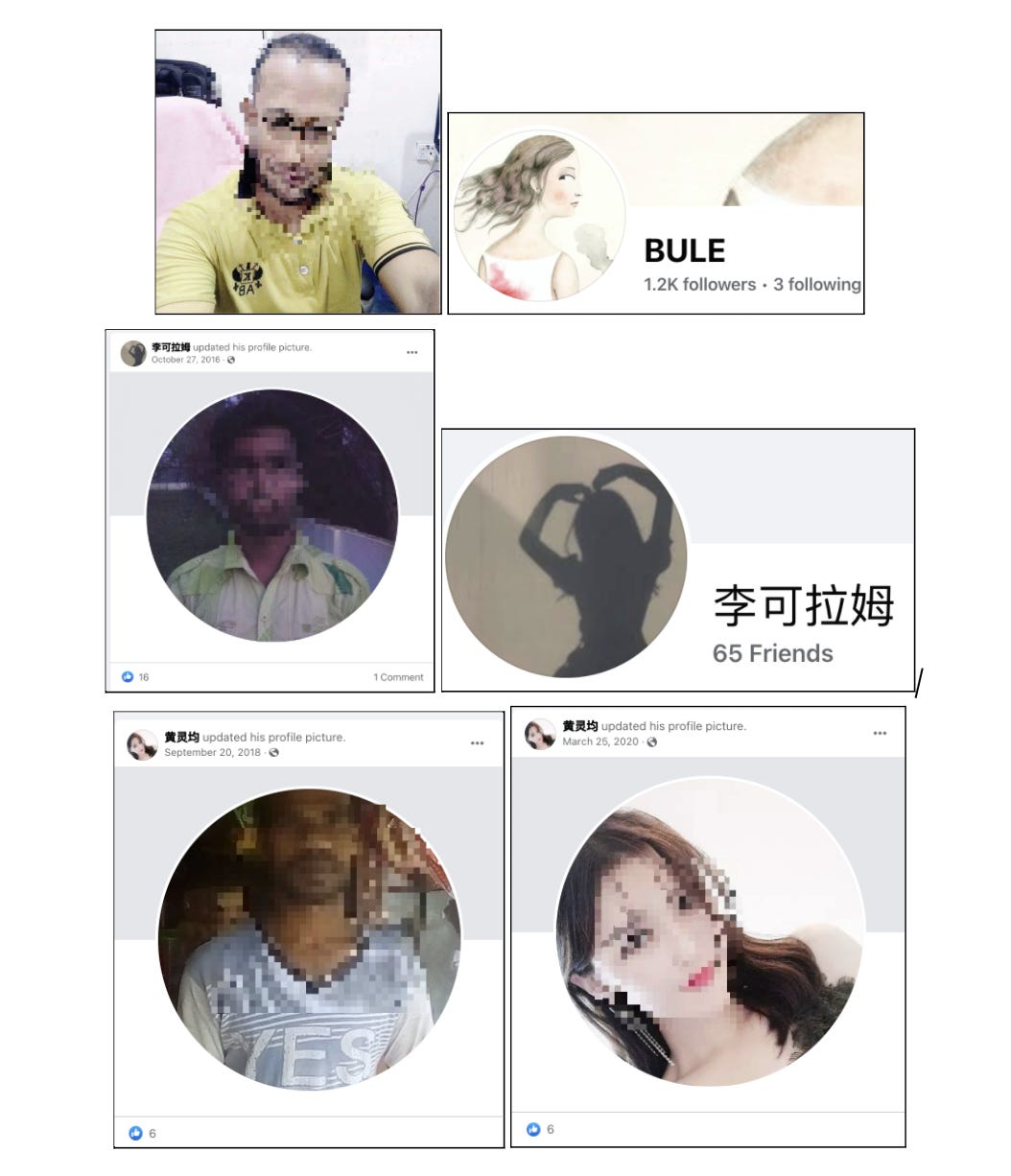

As in this actor’s past operations, pages dominated the set as active producers of content. Many pages began with one name, such as a pseudorandom string of consonants or a Bangladeshi name, and later changed names and began posting in Mandarin or Cantonese. Other pages adopted the known Spamouflage tactic of merging with another page to automatically gain its followers. Several pages also listed page managers located in Bangladesh or China.

Other accounts in the set used the tried-and-true Spamouflage strategy of starting out with a photo of a Bangladeshi man as the profile photo and later changing it to a photo of an Asian woman or an anonymous image.

#2. Attack of the Clones: Shared Names, Birthdays, and Faces

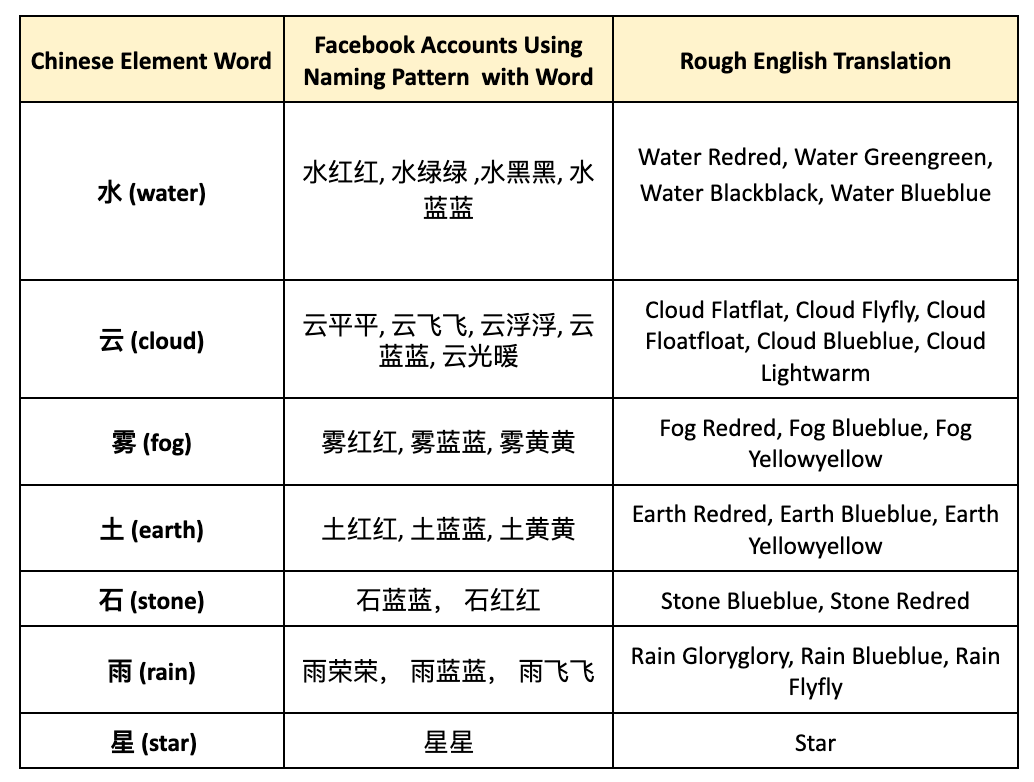

There were several common naming patterns in this set of accounts. A particularly common pattern combined an element of nature (water, earth, cloud, etc.) with a reduplicated color (e.g., “red red”) or a reduplicated movement (e.g., “fly fly”).

The table below shows “elemental” formulaic names used by several Spamouflage accounts.

Other formulaic names were also common.

阿一, 阿二, 阿三, 阿四 (roughly equivalent to A1, A2, A3, A4)

Best-Games-4836, Best-Games-5597

美黃黃, 美紅紅 (Beautiful Yellowyellow, Beautiful Redred)

克兰兰, 克军军, 克平平, 克文文

卡兰兰, 卡塔尔 (Qatar), 卡红红, 卡绿绿, 卡蓝蓝, 卡飞飞, 卡黄黄 (the last five roughly Ka Redred, Ka Greengreen, Ka Blueblue, Ka Flyfly, Ka Yellowyellow)

军红红, 军绿绿, 军蓝蓝, 军黄黄 (Army Redred, Army Greengreen, Army Blueblue, Army Yellowyellow)

江丝丝, 江兰兰, 江水水, 江江

Duplicate page names - Dfg, Fgh, 舒胡望, 许宝儿, 唐星冉, Asd, Sdf, Alison Jacobson, Larry, Jenny, Penny, Sanchez, 刘欢, 美猴王

Four-letter pseudorandom consonant cluster names - GGTY, Dhgf, Shxg, YYKL, KKBS, Zcgf

Simple nouns - Religion, Book1, Population, Building, Findings

Some assets also had patriotic Chinese names, such as “GREAT PRC”.

Dozens of accounts also used a formulaic naming pattern (shown in Figure 4): 圣西罗 (shèngxīluó, “San Siro”) followed by a string of five or six digits, a pattern that strongly suggests the names were generated by a computer. San Siro is a soccer stadium in Milan, Italy; several pages in this set appear to try to gain a following by promoting sports content or using sports-related names.
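
Because the 圣西罗 names follow a fixed template, they can be matched mechanically. The Python sketch below is illustrative only: the function name and sample names are hypothetical, and it assumes the digits follow the prefix directly, with no spaces or other separators.

```python
import re

# Hypothetical pattern: the prefix 圣西罗 ("San Siro") followed directly by
# exactly five or six ASCII digits and nothing else. [0-9] is used instead of
# \d because \d also matches non-ASCII digits in Python 3 str patterns.
SAN_SIRO_PATTERN = re.compile(r"^圣西罗[0-9]{5,6}$")


def looks_like_san_siro_name(name: str) -> bool:
    """Return True if an account name fits the 圣西罗 + 5-6 digits template."""
    return SAN_SIRO_PATTERN.match(name.strip()) is not None


# Made-up names for illustration, not accounts from the dataset.
candidates = ["圣西罗48213", "圣西罗930571", "圣西罗12", "San Siro fan", "圣西罗1234567"]
flagged = [n for n in candidates if looks_like_san_siro_name(n)]
# flagged == ["圣西罗48213", "圣西罗930571"]
```

A name-pattern match like this is only a lead for manual review; on its own it says nothing about who operates an account.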

Creation Date Overlap on Facebook and YouTube

Creation dates for Spamouflage assets on both Facebook[1] and YouTube show a clear pattern of batch creation between September 2020 and March 2021.
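
One simple way to surface batch creation is to count how many assets were created on each day and flag the days that stand out. The sketch below assumes creation dates have already been collected (for Facebook, only page creation dates are public); the `min_assets` threshold and the dates are hypothetical.

```python
from collections import Counter
from datetime import date


def batch_creation_days(creation_dates, min_assets=3):
    """Return, in order, the days on which at least `min_assets` assets were created.

    `min_assets` is a hypothetical threshold; in practice it should be set
    relative to the dataset's size and normal background creation rate.
    """
    counts = Counter(creation_dates)
    return sorted(day for day, n in counts.items() if n >= min_assets)


# Made-up creation dates for illustration only.
dates = [
    date(2020, 9, 14), date(2020, 9, 14), date(2020, 9, 14),
    date(2020, 11, 2),
    date(2021, 3, 8), date(2021, 3, 8), date(2021, 3, 8), date(2021, 3, 8),
]
print(batch_creation_days(dates))
# [datetime.date(2020, 9, 14), datetime.date(2021, 3, 8)]
```

Bucketing by week or month instead of by day is a one-line change to the key passed to `Counter` and may suit sparser data better.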

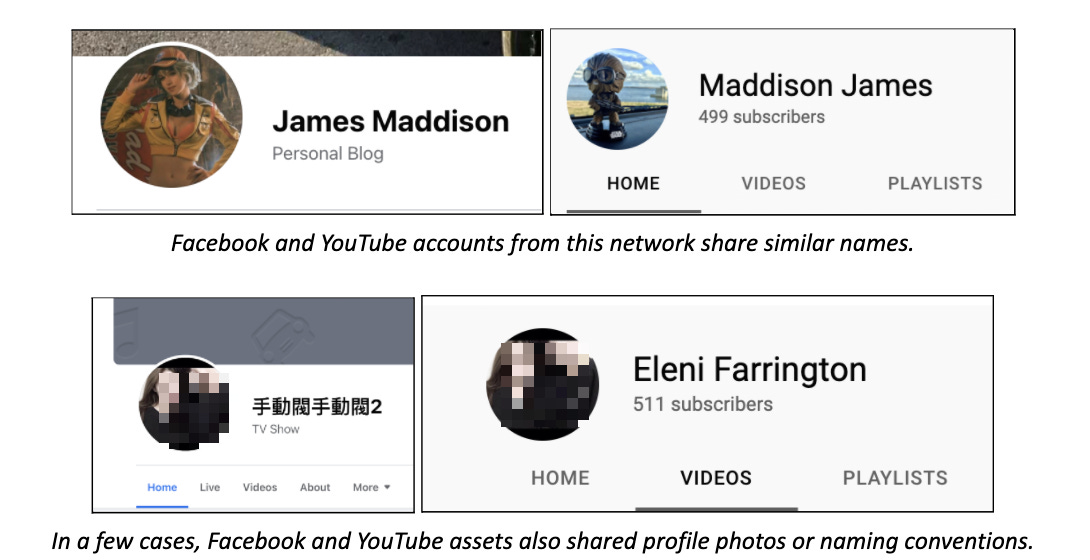

In keeping with its past operations, several of Spamouflage’s Facebook pages and accounts also use identical profile photos.
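
Byte-identical profile photos can be grouped by hashing the image files. This sketch uses SHA-256 from Python's standard library; the account IDs and byte strings are placeholders, and exact hashing misses copies that were resized or recompressed on upload.

```python
import hashlib
from collections import defaultdict


def group_identical_photos(photos):
    """Group account IDs whose profile photos are byte-for-byte identical.

    `photos` maps a (hypothetical) account ID to the raw bytes of its profile
    photo. Only exact duplicates are caught: a resized or recompressed copy of
    the same image hashes differently, which is why perceptual hashing is
    often used for this task in practice.
    """
    by_digest = defaultdict(list)
    for account_id, image_bytes in photos.items():
        digest = hashlib.sha256(image_bytes).hexdigest()
        by_digest[digest].append(account_id)
    # Keep only digests shared by more than one account.
    return [sorted(ids) for ids in by_digest.values() if len(ids) > 1]


# Stand-in byte strings instead of real image files.
photos = {
    "page_a": b"\x89PNG...same-face",
    "page_b": b"\x89PNG...same-face",
    "acct_c": b"\x89PNG...other-face",
}
print(group_identical_photos(photos))  # [['page_a', 'page_b']]
```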

#3. Seeds and Sprouts

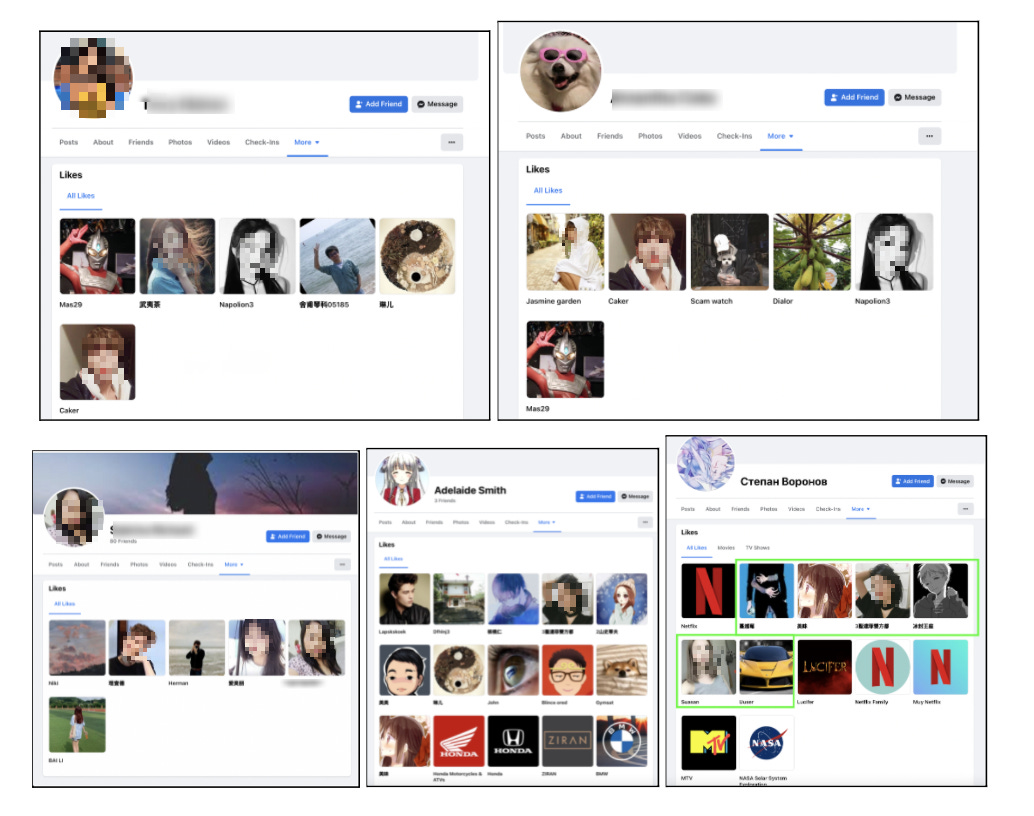

Several accounts in the set have no posts on their profiles but like this network’s affiliated pages and the content those pages promote. A small sample of these accounts is shown in the screenshots below.[2]

Generally, accounts in this network operate as either “seed” or “amplifier” accounts. Seed accounts publish original content and rarely interact with other users. Amplifiers, on the other hand, share, like, and comment on the seeds’ posts and rarely publish their own content. Network visualizations of this behavior on both YouTube[3] and Facebook follow below.
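
The seed/amplifier split can be approximated by comparing how much an account posts with how much it engages with others' posts. The `ActivityCounts` structure and the 0.8 cutoff below are assumptions chosen for illustration, not thresholds used in this investigation.

```python
from dataclasses import dataclass


@dataclass
class ActivityCounts:
    original_posts: int  # posts the account authored itself
    engagements: int     # shares, likes, and comments on others' posts


def classify_role(counts: ActivityCounts, threshold: float = 0.8) -> str:
    """Label an account 'seed', 'amplifier', 'mixed', or 'inactive'.

    The 0.8 threshold is a hypothetical cutoff for illustration.
    """
    total = counts.original_posts + counts.engagements
    if total == 0:
        return "inactive"
    share_original = counts.original_posts / total
    if share_original >= threshold:
        return "seed"
    if share_original <= 1 - threshold:
        return "amplifier"
    return "mixed"


print(classify_role(ActivityCounts(original_posts=40, engagements=2)))  # seed
print(classify_role(ActivityCounts(original_posts=0, engagements=12)))  # amplifier
```

Under this rule, an account with no posts of its own that only likes and shares network content, like the accounts described above, is labeled an amplifier.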

#4. Time and Time a GAN

Spamouflage also continues[4] to use computer-generated photographs of people as profile photos on Facebook and Twitter. These AI-generated photos are often referred to as GANs, after Generative Adversarial Networks, the type of machine-learning model used to create them. In early 2021, several of the accounts using GAN photos posted unattributed quotes from press statements by China’s Ministry of Foreign Affairs (MFA) on Twitter, celebrating the sanctions the Chinese government placed on former Trump administration officials shortly after Biden took office.

#5. Bot Behavior

Several accounts in this set show signs of automation. Many of the amplifying accounts share and like the same content at exactly the same time. Strangely, several accounts in the set appear to have gotten their start by posting quotes from The Moon and Sixpence, an early 20th-century novel by W. Somerset Maugham. (Spamouflage assets have previously been documented using a similar tactic, posting lifted quotes from Bram Stoker’s Dracula.)
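
Identical actions at identical timestamps can be found by grouping events on (content, action, timestamp). The event tuple layout and account names below are hypothetical, and exact-second matching is an assumption; real data may call for a small tolerance window.

```python
from collections import defaultdict
from datetime import datetime


def simultaneous_actions(events, min_accounts=2):
    """Find content that several accounts acted on at the same timestamp.

    `events` is a list of (account_id, content_id, action, timestamp) tuples.
    Returns {(content_id, action, timestamp): sorted account IDs} for groups
    of at least `min_accounts` distinct accounts.
    """
    groups = defaultdict(set)
    for account_id, content_id, action, ts in events:
        groups[(content_id, action, ts)].add(account_id)
    return {
        key: sorted(accounts)
        for key, accounts in groups.items()
        if len(accounts) >= min_accounts
    }


# Made-up events: three accounts share a post in the same second, one does not.
t = datetime(2021, 3, 22, 10, 15, 0)
events = [
    ("amp1", "post42", "share", t),
    ("amp2", "post42", "share", t),
    ("amp3", "post42", "share", t),
    ("user9", "post42", "share", datetime(2021, 3, 22, 18, 40, 5)),
]
for (content, action, ts), accounts in simultaneous_actions(events).items():
    print(content, action, ts.isoformat(), accounts)
# post42 share 2021-03-22T10:15:00 ['amp1', 'amp2', 'amp3']
```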

Accounts also engaged in copypasta: posting the same copy-pasted message in different places. On some occasions, the accounts we observed pasted the same (likely automated) reply on the same post. These identical messages often attacked Chinese dissident-in-exile Guo Wengui, one of the most common targets of Chinese information operations (IO).[5]
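
Copypasta can be grouped by normalizing each message and counting repeats. This sketch only lowercases text and collapses whitespace, an assumption that will miss lightly edited variants; the comment tuples and the `min_copies` threshold are hypothetical.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_copypasta(comments, min_copies=3):
    """Group (account_id, post_id, text) comments by normalized text.

    Returns {normalized_text: [(account_id, post_id), ...]} for messages that
    appear at least `min_copies` times.
    """
    groups = defaultdict(list)
    for account_id, post_id, text in comments:
        groups[normalize(text)].append((account_id, post_id))
    return {t: hits for t, hits in groups.items() if len(hits) >= min_copies}


# Made-up comments; two copies land on the same post, as described above.
comments = [
    ("a1", "p1", "Same   message HERE"),
    ("a2", "p1", "same message here"),
    ("a3", "p7", "Same message here "),
    ("a4", "p2", "An unrelated reply"),
]
result = find_copypasta(comments)
print(result)
# {'same message here': [('a1', 'p1'), ('a2', 'p1'), ('a3', 'p7')]}
```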

Want more? Read the rest of our Spamouflage Survives series here:

Part 1: Spamouflage Survives: CCP-aligned Disinformation Campaign Spreads on Facebook, Twitter, and YouTube

Part 4: Strait Deception: Spamouflage Spreads Propaganda and Stokes Tensions in Taiwan

Part 5: Spamouflage’s Ill Will: Anatomizing a Two-Year Pandemic Propaganda Campaign

Part 6: Emperor in the Ether: Spamouflage’s Authoritarian Attacks on Democracy and Journalists

[1] Since Facebook does not publicly reveal the date an individual account was created, the creation date figures for Facebook are based solely on pages, which do publicly show their creation dates.

[2] Some of these accounts also like unrelated popular pages, such as BMW, Netflix, NASA, and MTV. When not sharing Spamouflage content, many pages in this set shared posts from popular pages such as the NBA, NASA, or the K-pop group BLACKPINK, presumably in an attempt to gain visibility among ordinary users who follow those pages.

[3] Our methodology for generating these YouTube commenter graphs was as follows:

Finding high-confidence assets on YouTube through Facebook - working backwards from YouTube videos cited by high-confidence Facebook Spamouflage accounts, we assembled a set of the most frequently cited YouTube channels. Judging by these YouTube channels’ content and behavior, we manually assessed whether they were also Spamouflage assets.

Extracting commenters on these channels’ videos - we extracted over 3,000 comments on these suspected Spamouflage channels’ videos and built a directed edge list from this data (commenter → account commented on). This was our initial network graph, which consisted of 1,455 nodes and 3,644 edges.
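
The commenter → channel edge list described in this step might be built as below. The record format is hypothetical, and collapsing repeat comments into a single weighted edge is an assumption; the original graph may have counted them differently.

```python
from collections import Counter


def build_edge_list(comments):
    """Turn (commenter, channel) comment records into a weighted directed edge list.

    Each edge points commenter -> channel commented on. Repeat comments on
    the same channel are collapsed into one edge with a count (an assumption).
    Returns (sorted [((src, dst), weight), ...], set of nodes).
    """
    weights = Counter((commenter, channel) for commenter, channel in comments)
    nodes = {n for edge in weights for n in edge}
    return sorted(weights.items()), nodes


# Made-up records: one viewer comments on two channels; one channel comments on another.
records = [
    ("viewerA", "channel1"),
    ("viewerA", "channel1"),
    ("viewerA", "channel2"),
    ("channel2", "channel1"),
]
edges, nodes = build_edge_list(records)
print(edges)
# [(('channel2', 'channel1'), 1), (('viewerA', 'channel1'), 2), (('viewerA', 'channel2'), 1)]
print(len(nodes), len(edges))  # 3 3
```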

Filtering - knowing that not all of these commenters would be Spamouflage assets, we used k-core reduction (with k = 5) to reduce the network to its most densely connected nodes. This step filtered out peripheral commenters, such as those who commented on only one video; the resulting network graph consists of 256 nodes and 2,084 edges. We manually assessed these accounts as well, confirming that they were likely part of Spamouflage operations on YouTube.
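
The k-core filter repeatedly removes nodes whose degree is below k until none remain. This pure-Python sketch counts degree as in-degree plus out-degree over unique directed edges, which matches how NetworkX's `k_core` treats directed graphs; ignoring self-loops is an assumption made here for simplicity. The example graph is synthetic.

```python
from collections import defaultdict


def k_core(edges, k=5):
    """Return the node set of the k-core of a directed graph given as (src, dst) pairs.

    Degree = in-degree + out-degree over unique directed edges. Self-loops are
    skipped (an assumption). Nodes with degree < k are peeled off repeatedly
    until every remaining node has degree >= k.
    """
    out_nb = defaultdict(set)
    in_nb = defaultdict(set)
    for src, dst in edges:
        if src != dst:
            out_nb[src].add(dst)
            in_nb[dst].add(src)
    nodes = set(out_nb) | set(in_nb)
    degree = {n: len(out_nb[n]) + len(in_nb[n]) for n in nodes}

    to_remove = [n for n in nodes if degree[n] < k]
    removed = set()
    while to_remove:
        node = to_remove.pop()
        if node in removed:
            continue
        removed.add(node)
        # Each directed edge touching `node` disappears, so each neighbor
        # loses one degree per edge it shared with `node`.
        for nb in list(out_nb[node]) + list(in_nb[node]):
            if nb in removed:
                continue
            degree[nb] -= 1
            if degree[nb] < k:
                to_remove.append(nb)
    return nodes - removed


# Synthetic graph: six densely linked accounts plus two peripheral commenters.
core_members = [f"n{i}" for i in range(6)]
edges = [(a, b) for i, a in enumerate(core_members) for b in core_members[i + 1:]]
edges += [("lurker", "n0")]                      # commented once: degree 1
edges += [("semi", f"n{i}") for i in range(4)]   # degree 4, still below k = 5
print(sorted(k_core(edges, k=5)))
# ['n0', 'n1', 'n2', 'n3', 'n4', 'n5']
```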

[4] Graphika has documented similar TTPs (tactics, techniques, and procedures) from Spamouflage in past reports: https://public-assets.graphika.com/reports/graphika_report_spamouflage_goes_to_america.pdf, https://public-assets.graphika.com/reports/graphika_report_spamouflage_breakout.pdf

[5] See https://iftf.org/disinfo-in-taiwan, https://medium.com/digintel/welcome-to-the-party-a-data-analysis-of-chinese-information-operations-6d48ee186939, https://www.bbc.com/news/world-asia-china-58062630, https://www.aspistrategist.org.au/stopasianhate-chinese-diaspora-targeted-by-ccp-disinformation-campaign/